C4: The History of Computers

Where a calculator on the ENIAC is equipped with 18,000 vacuum tubes and weighs 30 tons, computers in the future may have only 1,000 vacuum tubes and weigh only 1½ tons. — Popular Mechanics, 1949

Never trust a computer you can't lift. — Stan Mazor, 1970

Computers are such an integral part of our society that it is sometimes difficult to imagine life without them. However, computers as we know them are relatively new devices—the first electronic computers date back only to the 1940s. Over the past 80 years, technology has advanced at an astounding rate. Today, pocket calculators contain many times more memory capacity and processing power than did the mammoth computers of the 1950s and 1960s.

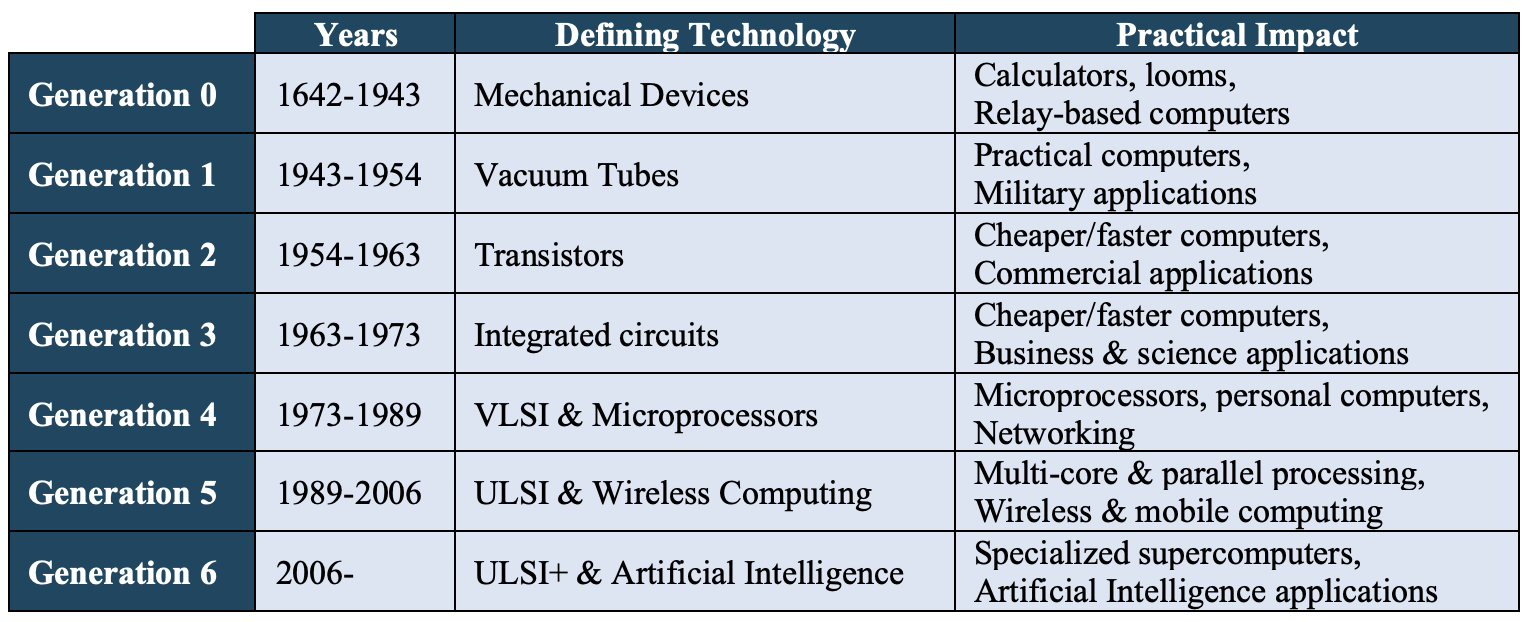

This chapter provides an overview of the history of computers and computer technology, tracing the progression from primitive mechanical calculators to modern PCs and mobile devices. As you will see, the evolution of computers has been rapid, but far from steady. Several key inventions have completely revolutionized computing technology, prompting drastic improvements in computer design, efficiency, and ease of use. Owing to this punctuated evolution, the history of computers is commonly divided into generations, each with its own defining technology (FIGURE 1). Although later chapters will revisit some of the more technical material, this chapter explores history's most significant computer-related inventions, why and how they came about, and their eventual impact on society.

FIGURE 1. Generations of computer technology.

Generation 0: Mechanical Computers (1642-1943)

The 17th century was a period of great scientific achievement, sometimes referred to as "the century of genius." Scientific pioneers such as astronomers Galileo (1564-1642) and Kepler (1570-1630), mathematicians Fermat (1601-1665) and Leibniz (1646-1716), and physicists Boyle (1627-1691) and Newton (1643-1727) laid the foundation for modern science by defining a rigorous, methodical approach to investigation based on a belief in unalterable natural laws. The universe, it was believed, was a complex machine that could be understood through careful observation and experimentation. Owing to this increased interest in science and mathematics, as well as contemporary advancements in mechanics, the first computing devices were invented during this period.

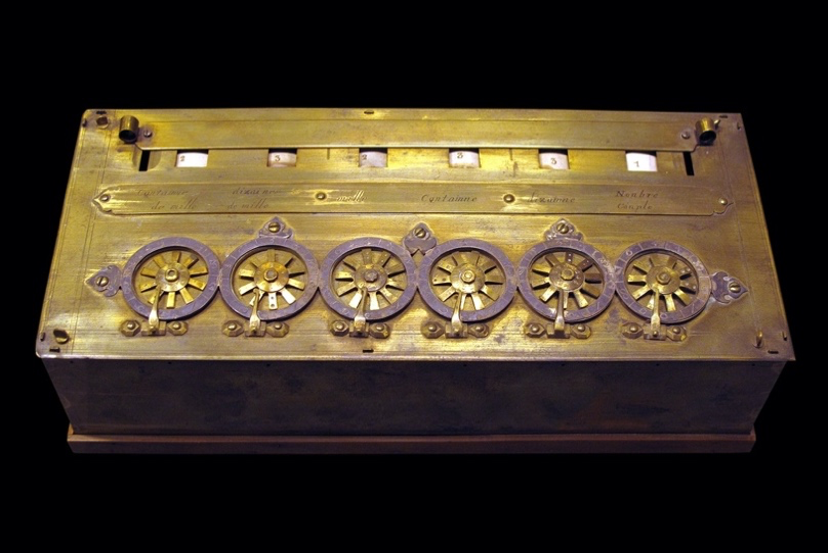

The German inventor Wilhelm Schickard (1592-1635) is credited with building the first working calculator in 1623. However, the details of Schickard's design were lost in a fire soon after the calculator's construction, and historians know little about the workings of this machine. The earliest prototype that has survived is that of the French scientist Blaise Pascal (1623-1662), who built a mechanical calculator in 1642. His machine used mechanical gears and was powered by hand—a person could enter numbers up to six digits long using dials, then turn a crank to either add or subtract (FIGURE 2). Thirty years later, the German mathematician Gottfried Wilhelm von Leibniz (1646-1716) expanded on Pascal's designs to build a mechanical calculator that could also multiply and divide.

FIGURE 2. Pascal's calculator (Rama/Wikimedia Commons).

Although inventors such as Pascal and Leibniz were able to demonstrate the design principles of mechanical calculators, constructing working models was difficult, owing to the precision required in making and assembling all the interlocking pieces. It wasn't until the early 1800s that manufacturing methods improved to the point where calculators could be mass produced and used in businesses and laboratories. A variation of Leibniz's calculator, built by Thomas de Colmar (1785-1870) in 1820, was widely used throughout the 19th century.

✔ QUICK-CHECK 4.1: TRUE or FALSE? Mechanical calculators were designed and built in the 1600s but were not widely used until the 1800s.

Programmable Devices

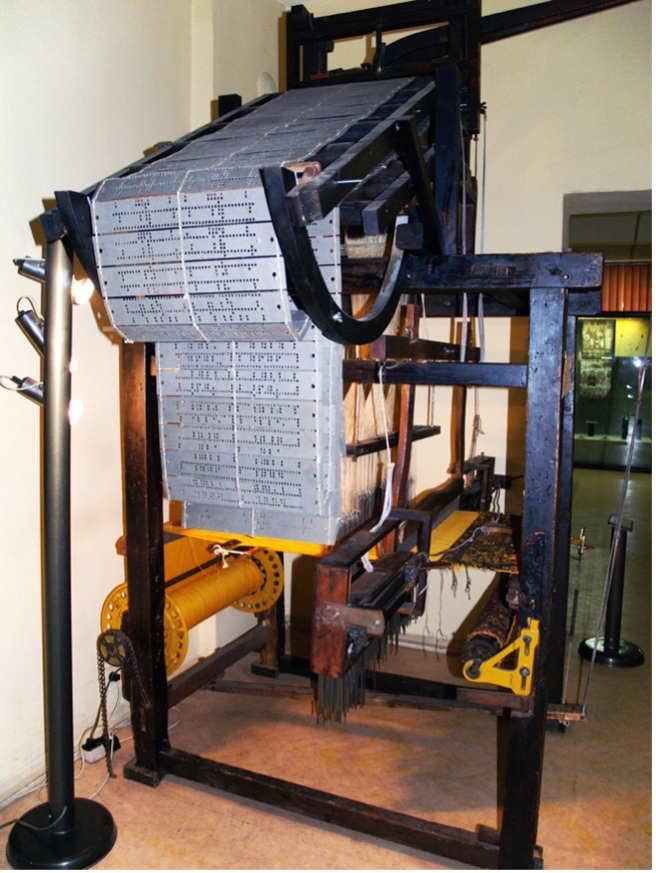

Although mechanical calculators grew in popularity during the 1800s, the first programmable machine was not a calculator at all, but a loom. Around 1801, Frenchman Joseph Marie Jacquard (1752-1834) invented a programmable loom in which removable punch cards were used to represent patterns (FIGURE 3). Before Jacquard designed this loom, producing tapestries and patterned fabric was complex and tedious work. To generate a pattern, loom operators had to manually weave different colored threads (called wefts) over and under the lengthwise threads (called warps), producing the desired effect. Jacquard devised a way of encoding the thread patterns using cards with holes punched in them. When a card was fed through the machine, hooks passed through the holes to raise selected warp threads and create a specific over-and-under pattern.

Using Jacquard's invention, complex patterns could be captured on a series of cards and reproduced exactly. Furthermore, weavers could program the same loom to generate different patterns simply by switching out the cards. In addition to laying the foundation for later programmable devices, Jacquard's loom had a significant impact on the economy and culture of 19th-century Europe. Elaborate fabrics, which were once considered a symbol of wealth and prestige, could now be mass produced, and therefore became affordable to the masses.

FIGURE 3. Jacquard's loom (Edal Anton Lefterov/Wikimedia Commons).

A few decades later, Jacquard's idea of storing information as holes punched into cards resurfaced in the work of English mathematician Charles Babbage (1791-1871). In 1821, Babbage designed his Difference Engine, a steam-powered mechanical calculator for solving mathematical equations. Because of limitations in manufacturing technology, Babbage was never able to construct a fully functional model of the Difference Engine. However, a prototype that punched output onto copper plates was built and used to compute data for naval navigation. In 1833, Babbage expanded on his plans for the Difference Engine to design a more powerful machine that included many of the features of modern computers. Babbage envisioned this machine, which he called the Analytical Engine, as a general-purpose, programmable computer that, borrowing Jacquard's idea, accepted input via punched cards and printed output on paper. Like modern computers, the Analytical Engine was to encompass various integrated components, including a readable/writable memory (which Babbage called the store) for holding data and programs and a control unit (which he called the mill) for fetching and executing instructions.

Although a working model of the Analytical Engine was never completed, its innovative and visionary design was popularized by the writings and patronage of Augusta Ada Byron, Countess of Lovelace (1815-1852). Ada Lovelace was a brilliant mathematician who saw the potential of Babbage's design, noting that "the Analytical Engine weaves algebraical patterns just as the Jacquard-loom weaves flowers and leaves." Her extensive notes on the Analytical Engine included step-by-step instructions to be carried out by the machine; this contribution has since led the computing industry to recognize her as the world's first programmer (FIGURE 4).

FIGURE 4. Portraits of Charles Babbage (Thomas Dewell Scott/Wikimedia Commons) and Ada Lovelace (Alfred Edward Chalon/Wikimedia Commons).

✔ QUICK-CHECK 4.2: TRUE or FALSE? The first programmable machine was a mechanical calculator designed by Charles Babbage.

✔ QUICK-CHECK 4.3: TRUE or FALSE? Ada Lovelace is generally acknowledged as the world's first programmer, because of her work on Babbage's Analytical Engine.

Electromagnetic Relays

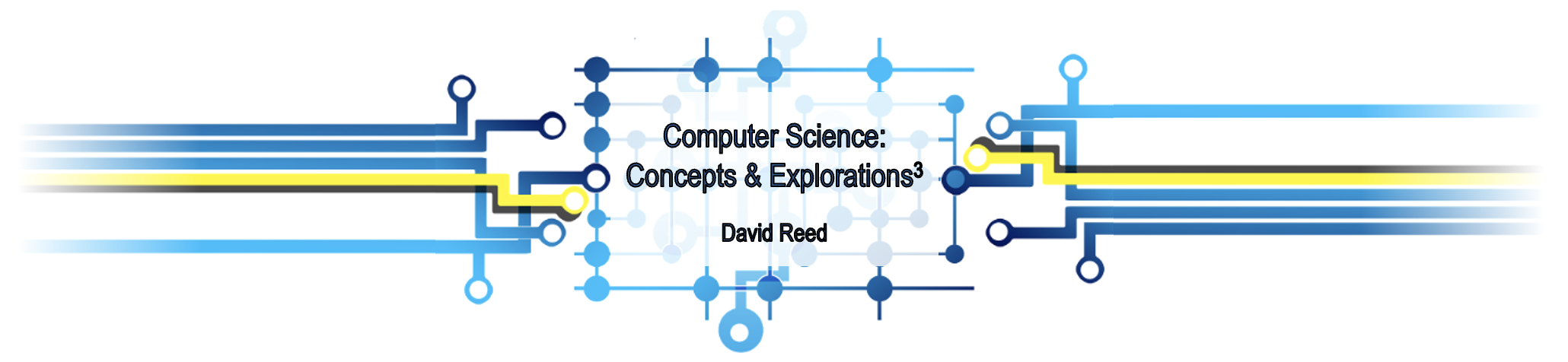

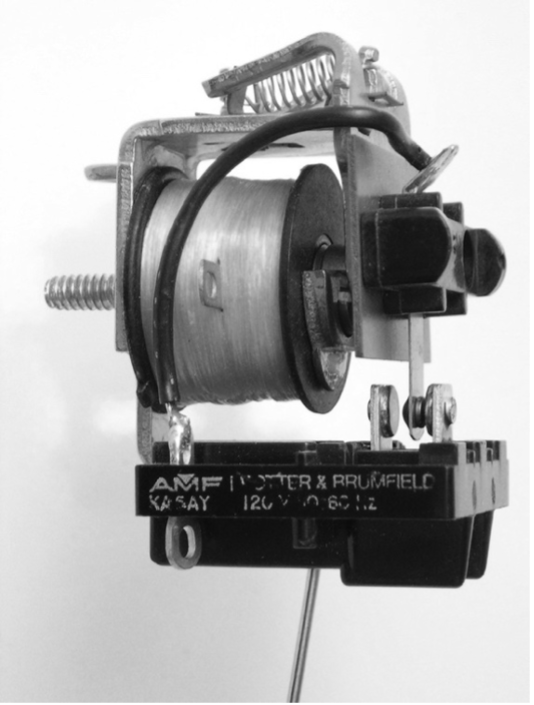

Despite the progression of mechanical calculators and programmable machines, computer technology as we think of it today did not really begin to develop until the 1930s, when electromagnetic relays came into use. An electromagnetic relay, a mechanical switch that can be used to control the flow of electricity through a wire, consists of an electromagnet positioned next to a movable metal arm (FIGURE 5, left). By default, the metal arm is in an open position, disconnected from the other metal components of the relay, which interrupts the flow of electricity. However, if current is applied to a control wire, the magnetic field generated by the electromagnet pulls the arm closed, allowing electricity to flow through the relay (FIGURE 5, right).

FIGURE 5. Electromagnetic relay (Grant Braught); Interactive visualizer.

✔ QUICK-CHECK 4.4: Select 5V in the interactive visualizer to see how the electromagnetic relay behaves as an electrical switch, turning "on" the output wire when the control wire is "on".

Electromagnetic relays were used extensively in early telephone exchanges to control the connections between phones. In the 1930s, researchers began to use these simple electrical switches to implement the complex logic that controls a computer. German engineer Konrad Zuse (1910-1995) is credited with building the first relay-powered computer in the late 1930s. However, his work was classified by the German government and eventually destroyed during World War II; thus, it did not influence other researchers. During the same period, George Stibitz (1904-1995) at Bell Labs independently designed and built computers using electromagnetic relays, while John Atanasoff (1903-1995) at Iowa State University built a computer that used vacuum tubes rather than relays for its arithmetic. In the early 1940s, Harvard University's Howard Aiken (1900-1973) rediscovered Babbage's designs and applied some of Babbage's ideas to modern technology, culminating in the construction of the Mark I computer in 1944.

Compared with modern computers, these early machines might seem primitive in speed and computational power. For example, the Mark I computer could perform a series of mathematical operations, but its computational capabilities were limited to addition, subtraction, multiplication, division, and various trigonometric functions. It could store only 72 numbers in memory and required roughly one-tenth of a second to perform an addition, 6 seconds to perform a multiplication, and 12 seconds to perform a division. Nevertheless, the Mark I represented a major advance in that it could complete complex calculations much faster than humans or mechanical calculators.

✔ QUICK-CHECK 4.5: TRUE or FALSE? An electromagnetic relay is a mechanical switch that can be used to control the flow of electricity through a wire.

Generation 1: Vacuum Tubes (1943-1954)

Although electromagnetic relays certainly function much faster than wheels and gears, relay-based computing still required the opening and closing of mechanical switches. Thus, computing speeds were limited by the inertia of moving parts. Relays also posed reliability problems, as they tended to jam. A classic example is a 1947 incident involving the Harvard Mark II, in which a computer failure was eventually traced to a moth that had become wedged between relay contacts. Grace Hopper (1906-1992), who was a research fellow on the project, taped the moth into the computer logbook and facetiously noted the "First actual case of bug being found."

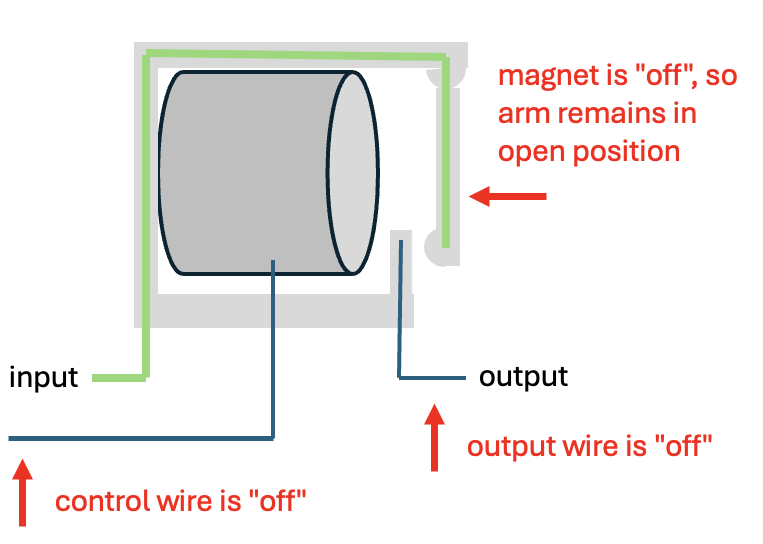

During the mid-1940s, computer designers began to replace electromagnetic relays with vacuum tubes, small glass tubes from which most of the gas has been removed (FIGURE 6, left). A vacuum tube is similar to an incandescent light bulb, with a metal filament that glows when electrical current (i.e., electrons) flows through it, but with two key differences. First, the partial vacuum within the tube makes it easier for electrons to flow across the filament, since there is minimal interference from gas molecules. Second, the filament has a tiny break in it that, by default, prevents electrons from flowing across it. However, applying electrical current to a control wire excites the remaining gas molecules in the tube, providing enough energy for electrons to bridge the gap and flow through the filament (FIGURE 6, right).

FIGURE 6. Vacuum tubes (djyan2/Pixabay); Interactive visualizer.

✔ QUICK-CHECK 4.6: Select 5V in the interactive visualizer to see how the vacuum tube behaves as an electrical switch, turning "on" the output wire when the control wire is "on".

Although vacuum tubes had been invented in 1906 by Lee de Forest (1873-1961), they did not represent an affordable alternative to relays until the 1940s, when improvements in manufacturing reduced their cost significantly. A vacuum tube is similar in function to an electromagnetic relay, acting as a switch that controls the flow of electricity. However, because vacuum tubes have no moving parts (only the electrons move), they enable the switching of electrical signals up to 1,000 times faster than relays.

Computing and World War II

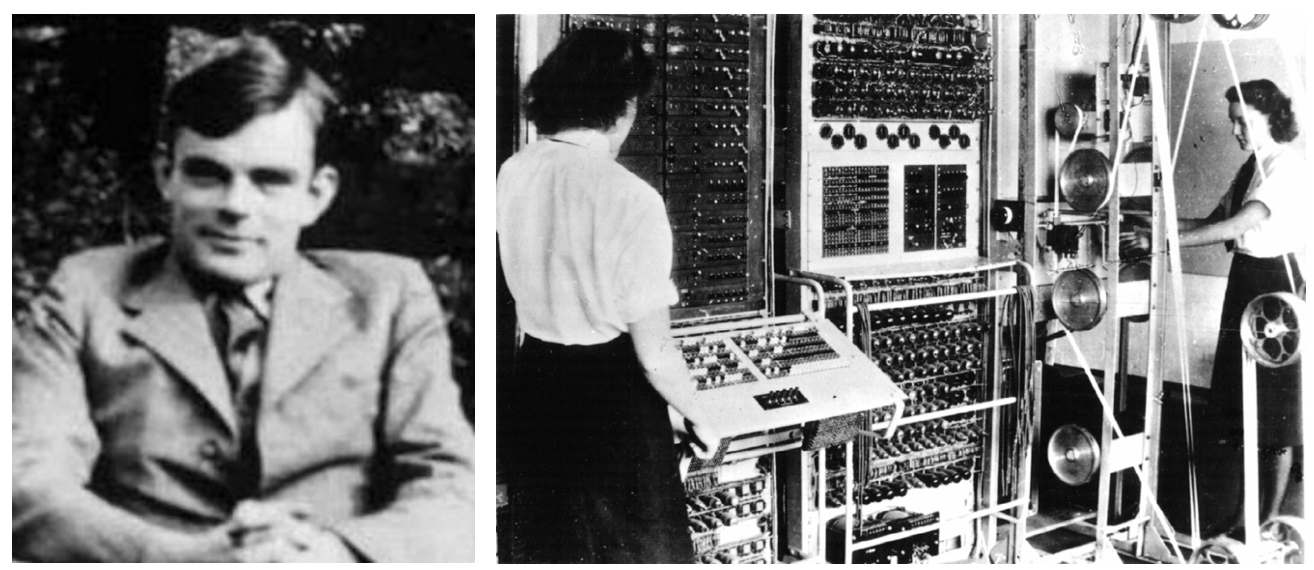

The development of the electronic (vacuum tube) computer, like many other technological inventions, was hastened by World War II. Computer pioneer Alan Turing (1912-1954) worked out many of the basic concepts of modern computers while serving at Bletchley Park, British Intelligence's code-breaking headquarters. He designed an electromechanical device, called the Bombe, that was used to decode Nazi communications encoded with the Enigma machine, whose cipher had been considered unbreakable. Building on Turing's work and ideas, the British government built the first programmable electronic computer, COLOSSUS, to automate the breaking of Nazi codes (FIGURE 7). COLOSSUS contained more than 2,300 vacuum tubes and was uniquely adapted to its intended purpose as a code breaker. It included five different processing units, each of which could read in and interpret 5,000 characters of code per second. Using COLOSSUS, British Intelligence was able to decode many Nazi military communications, providing invaluable support to Allied operations during the war. In fact, some historians estimate that Turing and his code-breaking machines shortened the war by two to four years, saving as many as 28 million lives.

FIGURE 7. Alan Turing, circa 1930; The COLOSSUS at Bletchley Park, England (UK National Archives).

Unfortunately, the work of Turing and his fellow codebreakers was classified by the British government for more than 30 years, denying them much-deserved credit and limiting the influence this work had on other researchers. Turing was awarded the Order of the British Empire after the war and worked on several ground-breaking computer projects over the next decade. His life ended tragically in 1954; after years of being persecuted as a homosexual, he committed suicide. In 2009, the British government made an official public apology for the way that Turing was treated. Turing received a posthumous royal pardon in 2013, and in 2017 the so-called "Alan Turing law" retroactively pardoned men who had been convicted under Britain's historical laws against homosexuality.

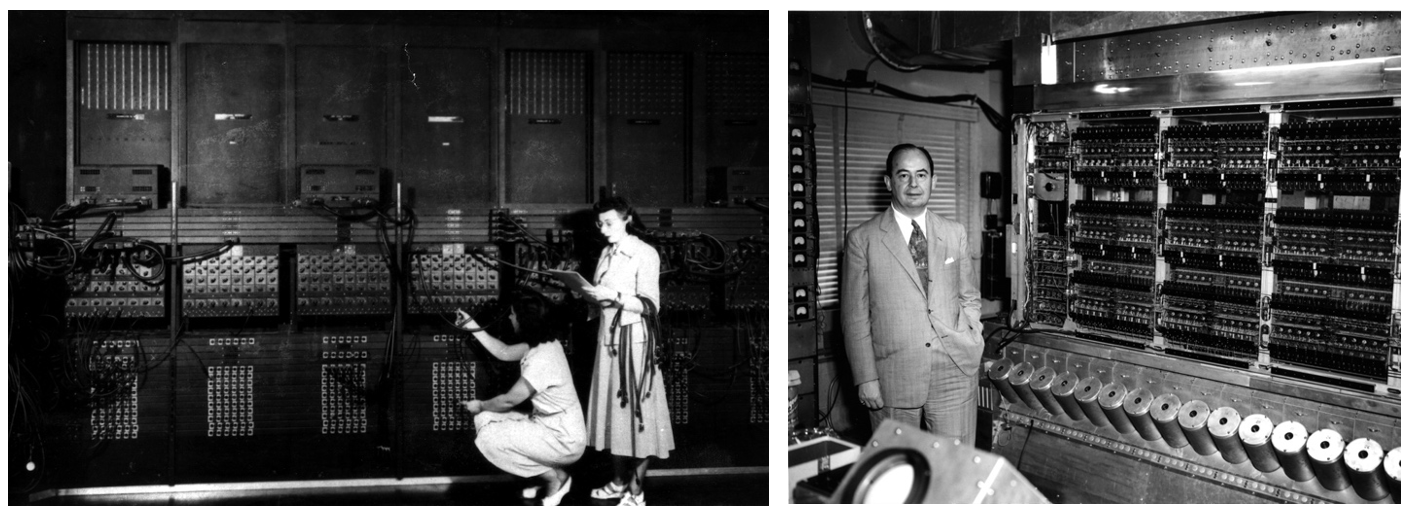

At roughly the same time in the United States, John Mauchly (1907-1980) and J. Presper Eckert (1919-1995) were building an electronic computer called ENIAC (Electronic Numerical Integrator And Computer) at the University of Pennsylvania (FIGURE 8). The ENIAC was designed to compute ballistics tables for the U.S. Army, but it was not completed until 1946. The machine consisted of 18,000 vacuum tubes and 1,500 relays, weighed 30 tons, and required 140 kilowatts of power. In some respects, the ENIAC was less advanced than its predecessors — it could store only 20 numbers in memory, whereas the Mark I could store 72. On the other hand, the ENIAC could perform more complex calculations than the Mark I could and operated up to 500 times faster (up to 5,000 additions per second). Another advantage of the ENIAC was that it was programmable, meaning that it could be reconfigured to perform different computations. However, programming the machine involved manually setting as many as 6,000 multi-position switches and reconnecting cables. Mastering this complex and challenging task largely fell to a team of six women mathematicians: Jean Jennings, Marlyn Wescoff, Ruth Lichterman, Betty Snyder, Frances Bilas, and Kay McNulty. Given the ENIAC blueprints and told to "figure out how the machine works and then figure out how to program it," these computing pioneers deserve much of the credit for the success of the ENIAC (FIGURE 8, left).

FIGURE 8. Programmers reconfiguring the ENIAC computer (U.S. Army); John von Neumann with the IAS computer (Archives of the Institute for Advanced Study).

✔ QUICK-CHECK 4.7: TRUE or FALSE? Although they were large and expensive by today's standards, early computers such as the Mark I and ENIAC were comparable in performance (memory capacity and processing speed) to modern desktop computers.

The von Neumann Architecture

Among the scientists involved in the ENIAC project was John von Neumann (1903-1957), who, along with Turing, is considered one of computer science's founding figures (FIGURE 8, right). Von Neumann recognized that programming via switches and cables was tedious and error prone. To address this problem, he designed an alternative computer architecture in which programs could be stored in memory along with data. Although Charles Babbage initially proposed this idea in his plans for the Analytical Engine, von Neumann is credited with formalizing it in the form that modern designs follow (see Chapter C1 for more details on the von Neumann architecture). Von Neumann also advocated storing values in memory using binary (base 2) representation, which offered many advantages over the decimal (base 10) representation employed in earlier machines such as the ENIAC. The von Neumann architecture was first used in vacuum tube computers such as EDVAC (Eckert and Mauchly at Penn, 1952) and IAS (von Neumann at Princeton, 1952), and it continues to form the basis for nearly all modern computers.
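To illustrate binary representation with a small example (the number 13 is chosen arbitrarily): in binary, each digit (bit) stands for a power of 2 instead of a power of 10, so the decimal number 13 is written as 1101:

$$
1101_2 = 1 \cdot 2^3 + 1 \cdot 2^2 + 0 \cdot 2^1 + 1 \cdot 2^0 = 8 + 4 + 0 + 1 = 13_{10}
$$

Because each bit has only two possible values, it maps naturally onto a switch such as a relay, vacuum tube, or transistor being off or on, which is one commonly cited reason binary proved practical for electronic hardware.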

Once computer designers adopted von Neumann's "stored-program" architecture, the process of programming computers became even more important than designing them. Before von Neumann, computers were not so much programmed as they were wired to perform a particular task. However, through the von Neumann architecture, a program could be read in (via punch cards or tapes) and stored in the computer's memory. At first, programs were written in machine language, sequences of 0s and 1s that corresponded to instructions executed by the hardware. This was an improvement over rewiring, but it required programmers to write and manipulate pages of binary numbers — a formidable and error-prone task. In the early 1950s, computer designers introduced assembly languages, which simplified the act of programming somewhat by substituting mnemonic names for binary numbers. (See Chapter C6 for more on machine and assembly language programming.)
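The substitution that an assembler performs can be sketched in a few lines of Python. This is a toy illustration under loudly stated assumptions: the mnemonics (LOAD, ADD, STORE, HALT), their opcodes, and the 8-bit word made of a 4-bit opcode plus a 4-bit memory address are all hypothetical and do not match any real early computer. The point is only that the programmer writes readable names and the assembler produces the equivalent string of 0s and 1s.

```python
# Hypothetical instruction set, invented for illustration only: it does not
# match the mnemonics, opcodes, or word size of any real early computer.
OPCODES = {"LOAD": 0b0001, "ADD": 0b0010, "STORE": 0b0011, "HALT": 0b1111}


def assemble(line: str) -> str:
    """Translate one line of (hypothetical) assembly language into an
    8-bit machine-language word: a 4-bit opcode followed by a 4-bit address."""
    parts = line.split()
    if not parts:
        raise ValueError("empty line")
    mnemonic = parts[0].upper()
    address = int(parts[1]) if len(parts) > 1 else 0   # HALT takes no address
    if mnemonic not in OPCODES:
        raise ValueError(f"unknown mnemonic: {mnemonic}")
    if not 0 <= address <= 15:
        raise ValueError("address must fit in 4 bits (0-15)")
    word = (OPCODES[mnemonic] << 4) | address   # opcode in the high 4 bits
    return format(word, "08b")                  # binary string, zero-padded to 8 digits


if __name__ == "__main__":
    # On this imagined accumulator machine: add the numbers stored at
    # addresses 4 and 5, then store the sum at address 6.
    for line in ["LOAD 4", "ADD 5", "STORE 6", "HALT"]:
        print(f"{line:<8} -> {assemble(line)}")
```

For example, `LOAD 4` becomes `00010100` and `HALT` becomes `11110000`. Before assembly languages, programmers had to write the right-hand column directly.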

The early 1950s also marked the beginnings of the commercial computer industry. Eckert and Mauchly left the University of Pennsylvania to form their own company and, in 1951, the Eckert-Mauchly Computer Corporation (later a part of Remington-Rand, then Sperry-Rand) began selling the UNIVAC I computer. The first UNIVAC I was purchased by the U.S. Census Bureau, and a subsequent UNIVAC I captured the public imagination when CBS used it to predict the 1952 presidential election. Several other companies soon joined Eckert-Mauchly and began to market computers commercially.

✔ QUICK-CHECK 4.8: TRUE or FALSE: Computers that utilize the von Neumann architecture store both data and programs in memory.

Generation 2: Transistors (1954-1963)

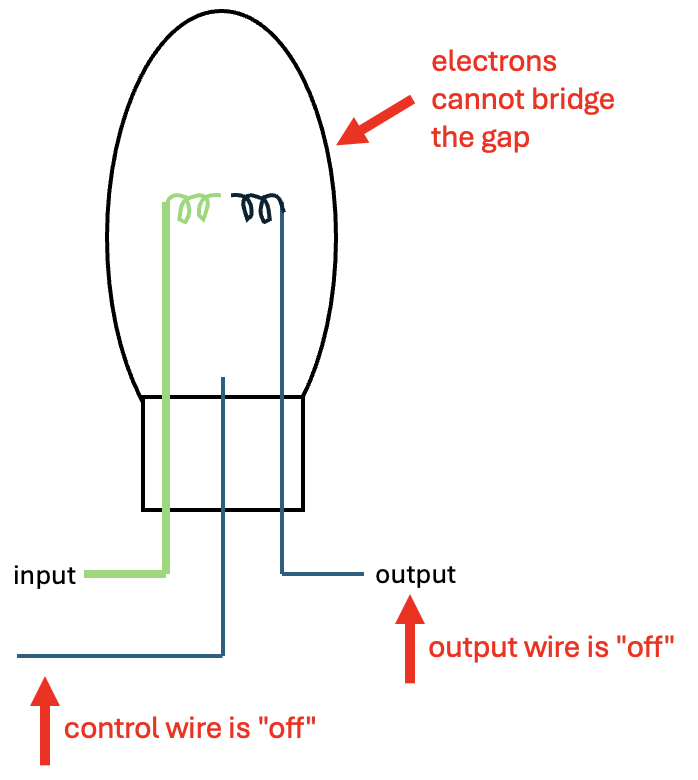

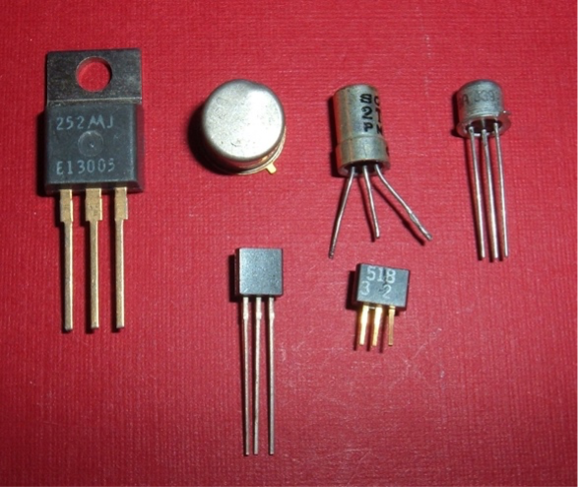

As computing technology evolved throughout the early 1950s, the disadvantages of vacuum tubes became more apparent. In addition to being relatively large (several inches long), vacuum tubes dissipated an enormous amount of heat, which meant that they required substantial space for cooling and tended to burn out frequently. The next major advance in computer technology was the replacement of vacuum tubes with transistors (FIGURE 9, left). Invented by John Bardeen (1908-1991), Walter Brattain (1902-1987), and William Shockley (1910-1989) in 1947, a transistor is a small piece of semiconductor material whose conductivity can be turned on and off using an electric current. Transistors are made from semiconductors (originally germanium, but now silicon) that are treated with impurities and layered to produce the desired switching effect. In its default state, the semiconductor material in the transistor conducts electricity, which is equivalent to a lit vacuum tube or a closed relay. When an electrical current is applied along a control wire, however, its electrical properties change and it no longer conducts electricity, which is equivalent to an unlit vacuum tube or an open relay (FIGURE 9, right). While comparable in function, transistors were much smaller, cheaper, more reliable, and more energy efficient than vacuum tubes. Thus, the introduction of transistors allowed computer designers to produce smaller, faster machines at a drastically lower cost.

FIGURE 9. Transistors (Arnold Reinhold/Wikimedia Commons); Interactive visualizer.

✔ QUICK-CHECK 4.9: Select 5V in the interactive visualizer to see how the transistor behaves as an electrical switch, turning "off" the output wire when the control wire is "on".

Many experts consider transistors to be the most important technological development of the 20th century. Transistors spurred the proliferation of countless small, affordable electronic devices, including radios, televisions, phones, and computers, and helped give rise to the information-based, media-reliant economy that accompanied these inventions. The scientific community recognized the potential impact of transistors almost immediately, awarding Bardeen, Brattain, and Shockley the 1956 Nobel Prize in Physics. Two of the first transistorized supercomputers, Sperry-Rand's LARC and IBM's STRETCH, were commissioned in 1956 by the Atomic Energy Commission to assist in nuclear research. By the early 1960s, companies such as IBM, Sperry-Rand, and Digital Equipment Corporation (DEC) began marketing transistor-based computers to private businesses.

High-Level Programming Languages

As transistors enabled the creation of more affordable computers, even more emphasis was placed on programming. If people other than engineering experts were going to use computers, interacting with the computer would have to become simpler. In 1957, John Backus (1924-2007) and his group at IBM introduced the first widely used high-level programming language, FORTRAN (FORmula TRANslator). This language allowed programmers to work at a higher level of abstraction, specifying computer tasks via mathematical formulas instead of machine-level instructions. For example, the following simple FORTRAN program reads in two numbers and displays their sum:

```fortran
      PROGRAM add
C     Read two numbers (implicitly typed REAL), then display their sum
      READ *, a, b
      s = a + b
      PRINT *, ' The sum is ', s
      STOP
      END
```

High-level languages like FORTRAN greatly simplified the task of programming, although IBM's original claims that FORTRAN would "eliminate coding errors and the debugging process" were overly optimistic. FORTRAN was soon followed by other high-level languages, including LISP (John McCarthy at MIT, 1959), COBOL (Grace Hopper at the Department of Defense, 1960), and ALGOL 60 (U.S./European joint committee, 1960).

✔ QUICK-CHECK 4.10: TRUE or FALSE? Because transistors were smaller and produced less heat than vacuum tubes, they allowed for the design of smaller and faster computers.

Generation 3: Integrated Circuits (1963-1971)

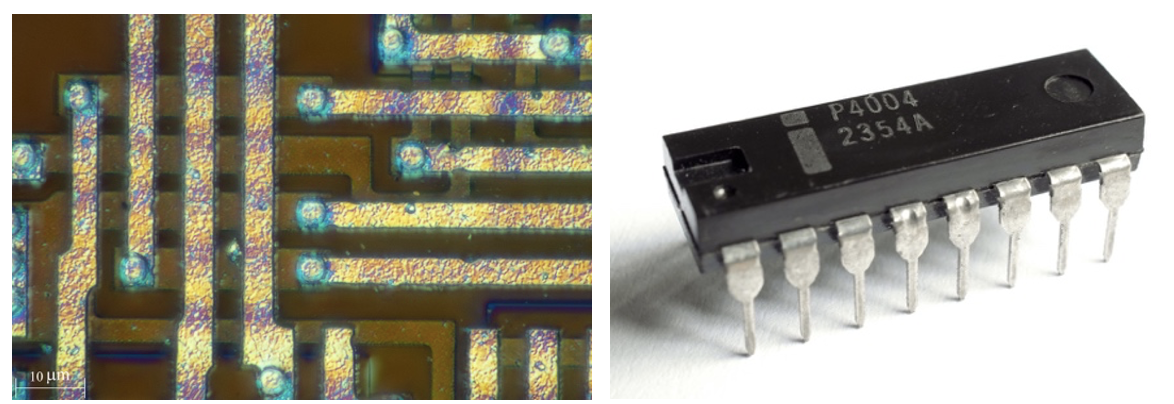

Transistors represented a major improvement over the vacuum tubes they replaced: they were smaller, cheaper to mass produce, and more energy efficient. By wiring transistors together, a computer designer could build circuits that performed specific computations. However, even simple calculations, such as adding two numbers, could require complex circuitry involving hundreds of transistors. Linking transistors together via wires was tedious and limited how small transistors could be made (because a person had to be able to physically connect wires between them). In 1958 and 1959, Jack Kilby (1923-2005) at Texas Instruments and Robert Noyce (1927-1990) at Fairchild Semiconductor Corporation independently developed techniques for mass producing much smaller, interconnected transistors. Instead of building individual transistors and connecting them with wires, Kilby and Noyce proposed manufacturing the transistors and their connections together as patterns on a single chip of semiconductor material. As both researchers demonstrated, transistors could be formed out of layers of semiconducting and insulating materials, while their connections could be made as tracks of conductive metal (FIGURE 10, left).

Because the transistors and their connections were layered together as the circuit was manufactured, the transistors could be made much smaller and placed closer together than before. Initially, tens or even hundreds of transistors could be layered onto the same chip and connected to form simple circuits. This type of chip, known as an integrated circuit or IC chip, is packaged in plastic and accompanied by external pins that connect to other components (FIGURE 10, right).

FIGURE 10. Silicon tracks connecting transistors in an integrated circuit (Alexander Klepnev/Wikimedia Commons); plastic packaging with metal pins as connectors (Thomas Nguyen/Wikimedia Commons).

The ability to package transistors and related circuitry on mass-produced IC chips made it possible to build computers that were smaller, faster, and cheaper. Instead of starting with transistors, an engineer could build computers out of prepackaged IC chips, which simplified design and construction tasks. In recognition of his work in developing the integrated circuit, Jack Kilby was eventually awarded the 2000 Nobel Prize in Physics.

✔ QUICK-CHECK 4.11: TRUE or FALSE: An integrated circuit combines transistors and their connections in a single package.

Computing for Business and Science

Whereas only large corporations could afford computers in the early 1960s, integrated circuit technology allowed manufacturers to lower computer prices significantly, enabling small businesses to purchase their own machines. This meant programming needed to become simpler and more intuitive to support less technically minded users. In the 1950s, programmers typically used specialized hardware devices to record code on paper cards or magnetic tape, then physically submitted the cards or tapes to technicians at a central computer lab, who fed the programs to the computer and later returned output reports to the programmers. It was a tedious, labor-intensive process, and the time between submitting a program and seeing its results could be hours or even days. In the early 1960s, time-sharing computers became popular, allowing multiple users to interact directly with the computer via keyboards and monitors. Although these computers could execute only one program at a time, the processor could be shared by swapping quickly from one program to the next. If the swapping was fast enough, it created the illusion that all the programs were running simultaneously, and each user felt as though they had direct control over the computer.
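The swapping idea behind time-sharing can be sketched as a simple round-robin schedule, shown here in Python. This is a minimal illustration under stated assumptions: the user names, the amounts of work, and the time-slice length (`quantum`) are hypothetical, and the function name `time_share` is invented for this sketch. Each program runs for at most one slice, then goes to the back of the line if it still has work left.

```python
from collections import deque


def time_share(jobs: dict[str, int], quantum: int) -> list[tuple[str, int]]:
    """Simulate one processor shared round-robin among several programs.

    jobs maps a (hypothetical) user name to the time units of work that
    user's program still needs; quantum is the longest slice any program
    may run before it is swapped out. Returns the (user, units_run) slices
    in the order the processor executed them.
    """
    if quantum < 1:
        raise ValueError("quantum must be at least 1 time unit")
    ready = deque(jobs.items())              # programs waiting for their turn
    schedule: list[tuple[str, int]] = []
    while ready:
        user, remaining = ready.popleft()    # swap the next program in
        ran = min(quantum, remaining)        # run it for at most one slice
        schedule.append((user, ran))
        if remaining > ran:                  # unfinished: swap out, rejoin the line
            ready.append((user, remaining - ran))
    return schedule


if __name__ == "__main__":
    for user, ran in time_share({"alice": 5, "bob": 2, "carol": 3}, quantum=2):
        print(f"{user} runs for {ran} time unit(s)")
```

With these inputs the processor alternates alice, bob, carol, alice, carol, alice, so no user waits more than two slices between turns. If each slice lasted only a small fraction of a second, every user would see steady progress, which is the illusion described above. Real time-sharing systems also had to save and restore each program's state, manage memory, and handle input and output, none of which this sketch models.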

Time-sharing required complex software to manage all the user programs, swapping them in and out of memory and making sure that no information was lost. This software became known as an operating system. Operating systems grew in complexity throughout the 1960s, encompassing programs not only to manage time-sharing but also to organize files and coordinate with devices such as keyboards, monitors, and printers (see Chapter C1). Specialized programming languages were also developed to fill the needs of computing's new, broader base of users. BASIC (John Kemeny and Thomas Kurtz, 1964) was designed to be a simple programming language that even beginners could learn. Other languages such as BCPL (Martin Richards, 1967), ALGOL 68 (U.S./European joint committee, 1968), and Pascal (Niklaus Wirth, 1970) followed and were widely used.

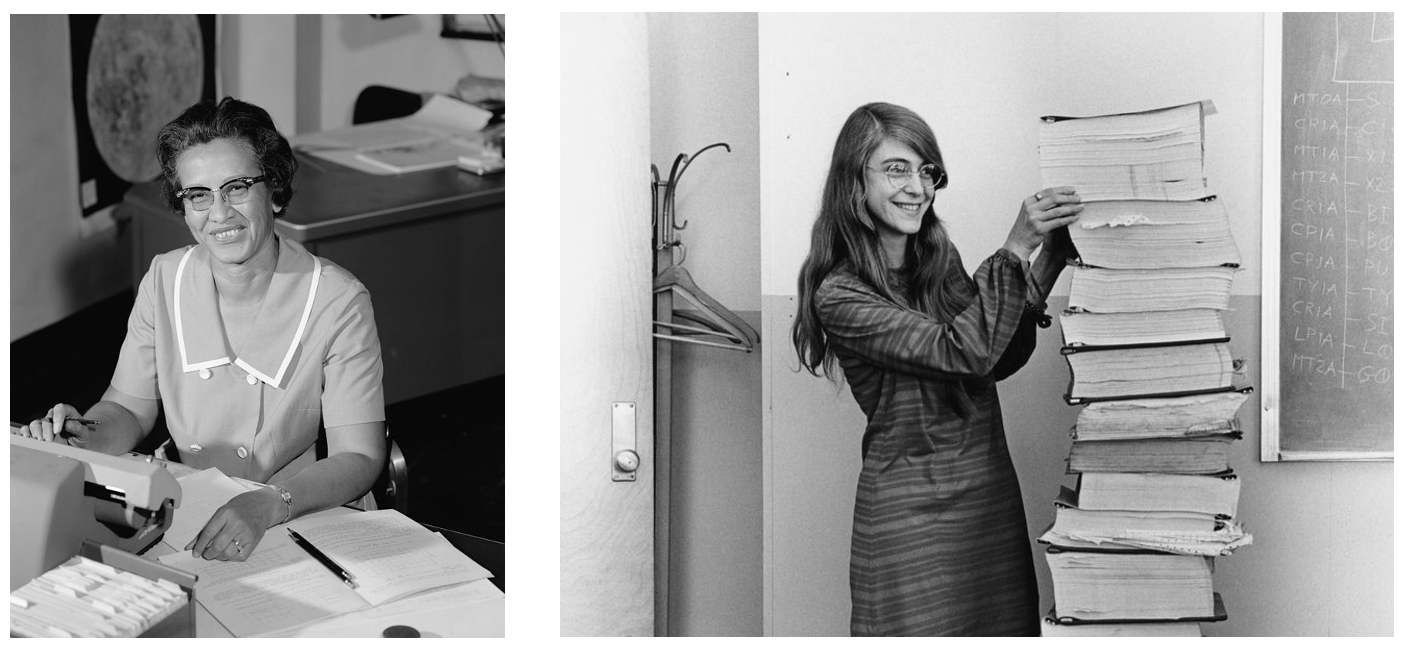

During this same period, the American space program was another driving force in the development of computer technology. In the late 1950s and early 1960s, much of the work of calculating complex orbital mechanics was done by hand. Mathematician Katherine Johnson (FIGURE 11, left), whose career was portrayed in the 2016 movie Hidden Figures, was one of the first African American women to work as a NASA scientist and is credited with being central to the success of the early space program. She also pioneered the use of computers to take over many computational tasks. By the late 1960s, computers were used extensively to calculate orbits and control flight systems. Computer scientist Margaret Hamilton led a team from MIT that developed the on-board flight software that landed astronauts on the moon. FIGURE 11 (right) shows Hamilton standing next to a stack of program listings for the Apollo Guidance Computer.

FIGURE 11. Katherine Johnson, who calculated orbits for early NASA spaceflights; Margaret Hamilton, who led the development team for the Apollo on-board flight software (NASA/Wikimedia Commons).

✔ QUICK-CHECK 4.12: TRUE or FALSE: On a time-sharing computer, multiple users can share access to the device, with the processor swapping in and out of jobs.

Generation 4: VLSI & Microprocessors (1971-1989)

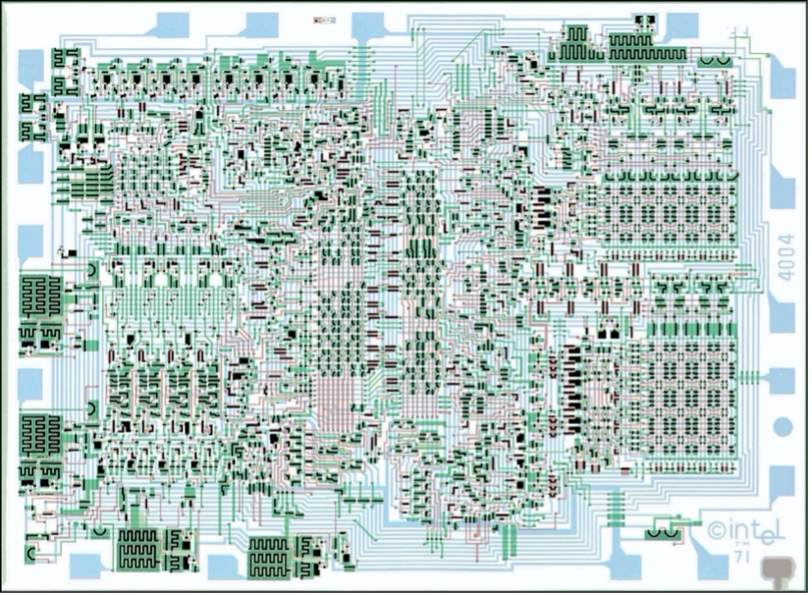

As manufacturing technology improved, the number of transistors that could be mounted on a single chip increased dramatically. In 1965, Gordon Moore (1929-2023), who later co-founded Intel Corporation, noticed that the number of transistors that could fit on a chip seemed to double every 1-2 years. This trend, which became known as Moore's Law, continued to be an accurate predictor of technological advancements for decades. By the 1970s, the very large scale integration (VLSI) of thousands of transistors on a single IC chip became possible. In 1971, Intel took the logical step of combining all the control circuitry for a calculator onto an IC chip, the Intel 4004. This chip contained roughly 2,300 transistors and their connections (FIGURE 12). Since the single IC chip contained all the transistors and circuitry for controlling the device, it became known as a microprocessor (eventually shortened to just processor).

FIGURE 12. Microscopic image of the Intel 4004 microprocessor circuitry (Tim McNerney/Intel).

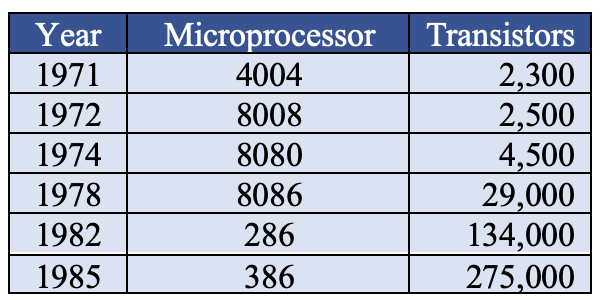

Three years later, Intel released the 8080 microprocessor, which contained 6,000 transistors and could be programmed to perform a wide variety of computational tasks. The Intel 8080 microprocessor and its successors, the 8086 and 8088 chips, served as central processing units for numerous personal computers in the 1970s and 1980s. Other chip vendors, including Texas Instruments, National Semiconductor, Fairchild Semiconductor, and Motorola, also began producing microprocessors during this period. Advancements in microprocessor technology can be seen in FIGURE 13, which lists information on microprocessors manufactured by the Intel Corporation from 1971 to 1985. It is interesting to note that the first microprocessor, the 4004, had approximately the same number of switching devices (transistors) as did the 1943 COLOSSUS computer, which used vacuum tubes.

FIGURE 13. Numbers of transistors in Intel microprocessors (1971-1985).
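A rough worked check of Moore's Law against two figures quoted in this chapter: about 2,300 transistors in the 4004 (1971) and about 6,000 in the 8080 three years later (1974). Writing $N_0$ for the starting transistor count, $t_0$ for the starting year, and $T$ for the doubling period (symbols introduced here only for illustration), steady doubling predicts

$$
N(t) \approx N_0 \cdot 2^{(t - t_0)/T}
$$

With $N_0 = 2300$, $t_0 = 1971$, and a two-year doubling period:

$$
N(1974) \approx 2300 \cdot 2^{(1974 - 1971)/2} = 2300 \cdot 2^{1.5} \approx 2300 \times 2.83 \approx 6500
$$

A one-year doubling period would instead predict $2300 \cdot 2^{3} = 18{,}400$ transistors. Over this short span, then, a doubling period of about two years matches the 8080's roughly 6,000 transistors far better. This is a two-point sanity check, not a fit to the full data in FIGURE 13.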

✔ QUICK-CHECK 4.13: TRUE or FALSE? A microprocessor is a special-purpose computer that is used to control scientific machinery.

The Personal Computer Revolution

Once advances in manufacturing enabled the mass production of microprocessors, the cost of computers dropped to the point where individuals could afford them. The first personal computer (PC), the MITS Altair 8800, was marketed in 1975 for less than $500. In reality, the Altair was a computer kit consisting of all the necessary electronic components, including the Intel 8080 microprocessor that served as the machine's central processing unit. Customers were responsible for wiring and soldering these components together to assemble the computer. Once constructed, the Altair had no keyboard, no monitor, and no permanent storage—the user entered instructions directly by flipping switches on the console and viewed output as blinking lights. Despite these limitations, demand was overwhelming.

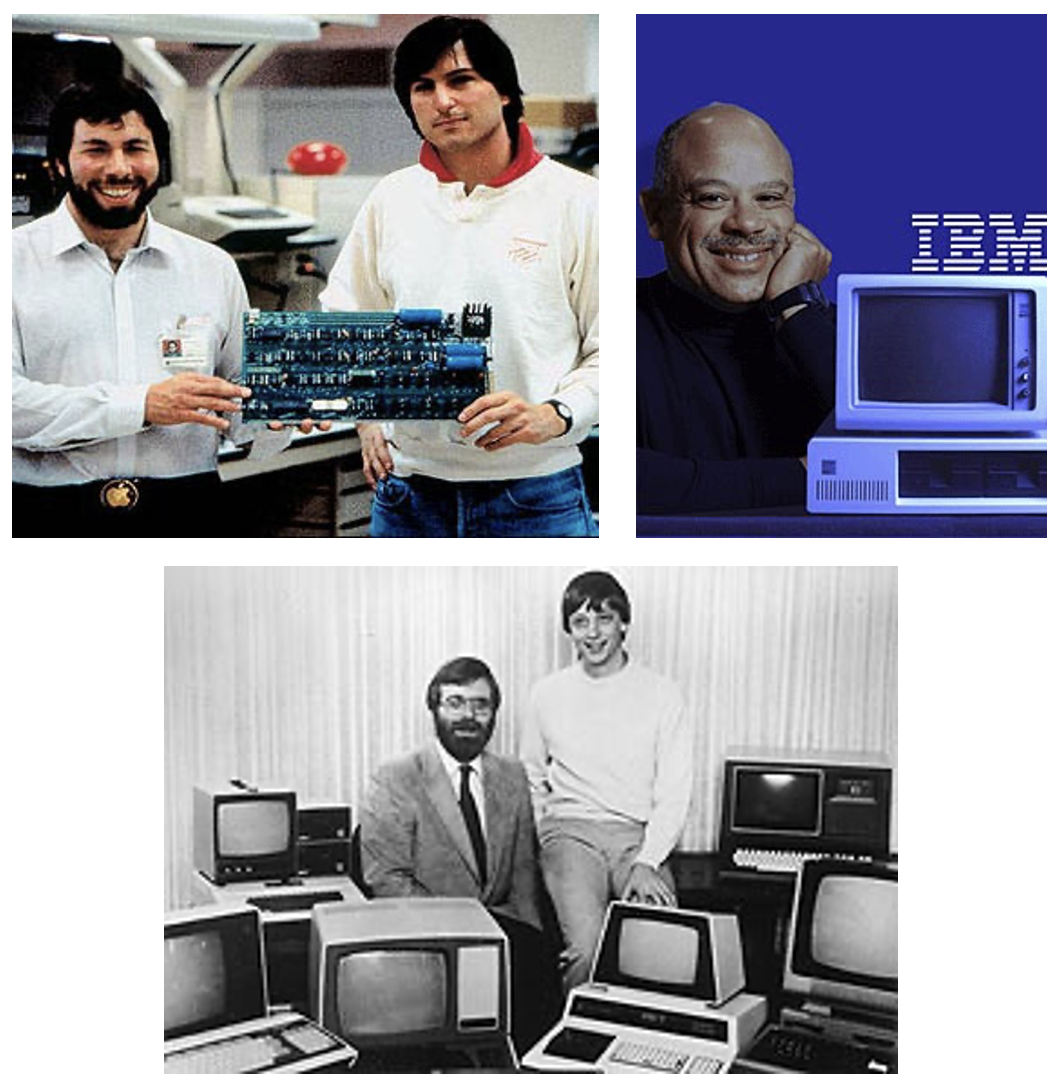

Although the company that sold the Altair folded within a few years, other small computer companies were able to successfully navigate the PC market during the late 1970s. In 1976, Steven Jobs (1955-2011) and Stephen Wozniak (1950-) started selling a computer kit similar to the Altair, which they called the Apple (FIGURE 14, left). In 1977, the two founded Apple Computer and began marketing the Apple II, the first preassembled personal computer that included a keyboard, sound, and color graphics. By 1980, Apple's annual sales of personal computers reached nearly $200 million. Other companies such as Tandy, Amiga, and Commodore soon began promoting their own versions of the personal computer. IBM, which was a dominant force in the world of business computing, introduced the IBM PC in 1981. Co-developed by Mark Dean, who would later become the first African American to be named an IBM Fellow (FIGURE 14, right), the IBM PC had an immediate impact on the personal computer market and influenced the design of many computers to come. In 1984, Apple countered with the Macintosh, which popularized the now familiar graphical user interface of windows, icons, pull-down menus, and a mouse pointer.

FIGURE 14. Steve Wozniak and Steve Jobs in 1978 (Apple Inc.); Mark Dean and the IBM PC (IT History Society); Paul Allen and Bill Gates in 1981 (Microsoft).

Throughout the early years of computing, software was mostly developed by large companies, such as IBM and Hewlett-Packard, and distributed along with hardware systems. With the advent of personal computers, a separate software industry grew up alongside the hardware makers. Bill Gates (1955-) and Paul Allen (1953-2018) are credited with writing the first commercial software for personal computers, an interpreter for the BASIC programming language that ran on the Altair (FIGURE 14, bottom). The two founded Microsoft in 1975, while Gates was still a student at Harvard, and built the company into the software giant it is today. Much of Microsoft's initial success can be attributed to Gates's recognition that PC software could be developed and marketed separately from the computers themselves. Microsoft developed the MS-DOS operating system as well as popular application programs, which it licensed to hardware companies such as IBM. As new versions of the software were developed, Microsoft was able to sell the upgrades directly to computer owners, and to keep doing so over the life of each computer. By the mid-1990s, Microsoft Windows (the successor to MS-DOS) and applications such as Word and Excel were widely adopted by PC users, and Bill Gates had become the richest person in the world.

✔ QUICK-CHECK 4.14: TRUE or FALSE? The first personal computer was the IBM PC, which first hit the market in 1980.

Networking and Object-Oriented Programming

Until the 1980s, most computers were stand-alone devices, not connected to other computers. Small-scale networks of computers, or local area networks (LANs), existed at large companies, but tended to use proprietary technology and were not available to personal computer users. In the 1980s, the Ethernet family of technologies was introduced, providing low-cost options for building local area networks and allowing computers to communicate and share information. Modern Ethernet networks can provide impressive communication speeds over short distances: information can be transmitted at 1 to 10 Gbits (billion bits) per second, depending on the specific technology used.

To connect computers across larger distances, a wide-area network (WAN) is required. The first WAN was the ARPANET, which eventually became known as the Internet (Chapter C3). From its inception with four connected computers in 1969, the ARPANET grew at a slow but steady pace during the 1970s and early 1980s. For example, by 1982 there were still only 235 connected computers. The PC revolution quickly led to the demand for connectivity so that individuals could communicate and share resources. Throughout the 1980s universities and small businesses rushed to join the Internet, largely to take advantage of electronic mail (email) and various file sharing applications. By 1989, almost 300,000 computers were connected to the Internet, and computers were well on their way to becoming communications tools as well as computational tools.
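The contrast between the ARPANET's "slow but steady" early growth and the rush of the 1980s can be quantified with a little arithmetic. The Python sketch below uses a hypothetical helper (not something from the text) that computes the average yearly growth factor implied by two counts of connected computers, treating "almost 300,000" as 300,000. Under that assumption, the network grew by roughly 37% per year from 1969 to 1982, but by nearly 180% per year from 1982 to 1989.

```python
def annual_growth_factor(year0: int, count0: float, year1: int, count1: float) -> float:
    """Return g such that count0 * g ** (year1 - year0) == count1 (compound growth)."""
    return (count1 / count0) ** (1 / (year1 - year0))


# Approximate numbers of connected computers quoted in the text.
early = annual_growth_factor(1969, 4, 1982, 235)
late = annual_growth_factor(1982, 235, 1989, 300_000)  # "almost 300,000"

print(f"1969-1982: about {100 * (early - 1):.0f}% more computers per year")
print(f"1982-1989: about {100 * (late - 1):.0f}% more computers per year")
```

A growth factor of 1.37 means each year's count is about 1.37 times the previous year's; compounding is what turns a modest-looking yearly factor into the large totals described above.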

The 1970s and 1980s also produced a proliferation of new programming languages, including those with an emphasis on object-oriented programming methodologies. Object orientation is an approach to software development in which the programmer models software components after real-world objects. Alan Kay (1940-) led the development of Smalltalk, one of the first object-oriented languages, which was released as Smalltalk-80 in 1980. Ada, a programming language developed for the Department of Defense to be used in government contracts, was also introduced in 1980. In 1985, Bjarne Stroustrup (1950-) developed C++, an object-oriented extension of the C language (Dennis Ritchie, 1972). C++ and its descendants Java (Sun Microsystems, 1995) and C# (Microsoft, 2001) have become some of the most widely used languages in commercial software development today.

✔ QUICK-CHECK 4.15: TRUE or FALSE? Ethernet was the name of the first long-distance computer network that evolved into today's Internet.

Generation 5: ULSI & Wireless Computing (1989-2006)

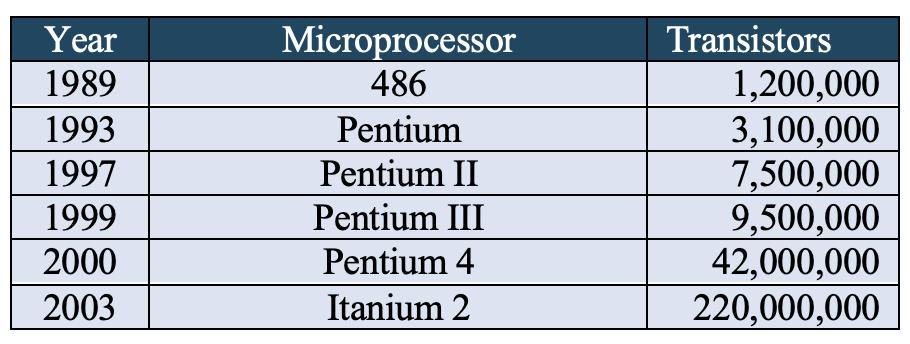

Similar to its predecessor, Generation 5 is defined by a manufacturing breakthrough. By 1989, the design and manufacturing process for integrated circuits had improved to where millions of transistors could fit on a single IC chip. This million-transistor threshold is commonly referred to as ultra large scale integration (ULSI). The first ULSI microprocessor, the Intel 486, was released in 1989 with 1.2 million transistors — more than 500 times as many as the Intel 4004 18 years earlier. FIGURE 15 shows how the number of transistors in microprocessors increased over the next decade and a half.

FIGURE 15. Numbers of transistors in Intel microprocessors (1989-2003).

Comparing the numbers in FIGUREs 13 and 15 does show an interesting trend. In FIGURE 13, which lists transistor counts for Intel processors from 1971 to 1985, Moore's Law is followed fairly consistently. That is, the number of transistors on a chip roughly doubles every 1 to 2 years. The growth trend is more erratic from 1989 to 2003 (FIGURE 15). For example, between 1997 and 1999, the number of transistors increased from 7.5 million (Pentium II) to only 9.5 million (Pentium III). However, there were huge leaps to 42 million transistors (Pentium 4) in 2000 and 220 million transistors (Itanium 2) in 2003. As transistor counts grew, it became more difficult to squeeze transistors even closer together, and so advances in miniaturization became less frequent.
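One way to check how closely a pair of processors follows Moore's Law is to compute the doubling time implied by their transistor counts. The following Python sketch does this; the helper names are hypothetical, and the Intel 4004's count of about 2,300 transistors is a commonly cited figure rather than one given in this section. For the 4004 (1971) and the 486 (1989), the implied doubling time comes out just under 2 years, while the step from the Pentium II (1997) to the Pentium III (1999) implies nearly 6 years per doubling.

```python
import math


def doubling_time(year0: int, count0: float, year1: int, count1: float) -> float:
    """Years per doubling implied by growth from count0 (in year0) to count1 (in year1)."""
    doublings = math.log2(count1 / count0)
    return (year1 - year0) / doublings


def projected_count(count0: float, years: float, doubling_years: float) -> float:
    """Transistor count after `years` if it doubles every `doubling_years`."""
    return count0 * 2 ** (years / doubling_years)


# Intel 4004 (about 2,300 transistors, 1971) to Intel 486 (1.2 million, 1989)
print(round(doubling_time(1971, 2_300, 1989, 1_200_000), 2))

# Pentium II (7.5 million, 1997) to Pentium III (9.5 million, 1999)
print(round(doubling_time(1997, 7_500_000, 1999, 9_500_000), 2))

# Starting from the 4004 and doubling every 2 years for 18 years
print(projected_count(2_300, 18, 2))
```

Doubling every 2 years for 18 years is nine doublings, a factor of 512, which projects roughly 1.18 million transistors: close to the 486's actual 1.2 million.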

Fortunately, new directions in computer design have emerged that promise continued growth in computer power. Multi-core processors and parallel processing architectures allow for faster and more powerful computers even if transistor density is approaching its physical limits. Chapter C1 introduced the concept of a multi-core processor, a processor that contains duplicate circuitry to enable it to execute multiple operations simultaneously. Most modern processors are multi-core, making them larger than their predecessors but able to complete more work in the same amount of time. The first commercially available multi-core processor was the IBM Power4, released in 2001. Today, personal computers with four to ten cores are common, and high-end computers can have as many as 72 cores (Intel's Xeon Phi).

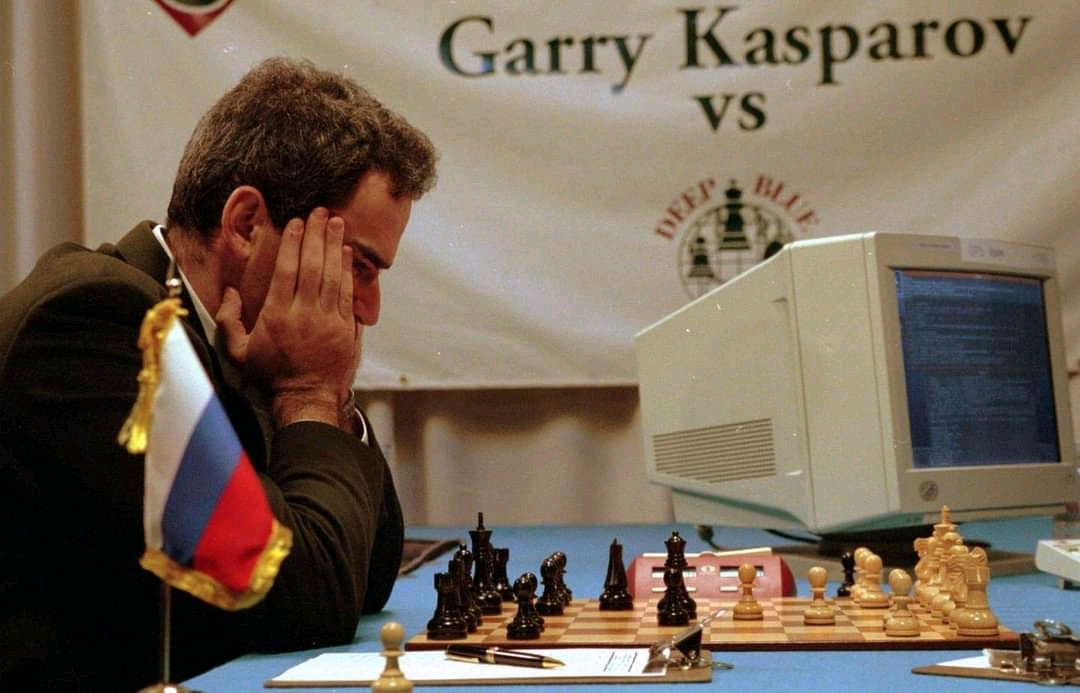

Parallel processing is a related but distinct approach in which a computer is designed with multiple (sometimes hundreds or thousands of) independent processors. By using independent processors, parallel processing machines are more complex than a computer using a single multi-core processor but are better able to manage simultaneous operations. For example, commercial Web servers often utilize parallel processing to distribute the load when hundreds or thousands of requests arrive simultaneously. A more extreme implementation of parallel processing was the IBM Deep Blue chess-playing computer developed in the mid-1990s. Deep Blue contained 32 general-purpose processors and 512 special-purpose chess processors, each of which could work in parallel to evaluate potential chess moves. Together, these processors could evaluate an average of 200 million chess positions per second! In 1997, Deep Blue became the first computer to defeat a reigning world champion in a match under standard tournament conditions, beating Garry Kasparov in a six-game match (FIGURE 16).

FIGURE 16. IBM Deep Blue defeats Garry Kasparov in 1997 (imgur.com).
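The Web-server example above can be made concrete with a toy model. The Python sketch below assumes a hypothetical dispatcher that hands requests to processors in round-robin order (one simple strategy among many that real servers use) and assumes every request takes the same amount of time. Under those assumptions, it shows why adding independent processors shortens the time needed to work through a burst of simultaneous requests.

```python
def round_robin(requests: list[str], num_processors: int) -> dict[int, list[str]]:
    """Hypothetical dispatcher: request i goes to processor i % num_processors."""
    assignment: dict[int, list[str]] = {p: [] for p in range(num_processors)}
    for i, request in enumerate(requests):
        assignment[i % num_processors].append(request)
    return assignment


def finish_time_ms(num_requests: int, num_processors: int, ms_per_request: int) -> int:
    """Time until the last request finishes, assuming equal-cost requests and
    each processor handling its share one request at a time."""
    rounds = -(-num_requests // num_processors)  # ceiling division
    return rounds * ms_per_request


requests = [f"request-{i}" for i in range(10)]
loads = {p: len(batch) for p, batch in round_robin(requests, 4).items()}
print(loads)  # {0: 3, 1: 3, 2: 2, 3: 2}

# 1,000 simultaneous requests, 10 ms each
for processors in (1, 8, 1000):
    print(processors, "processor(s):", finish_time_ms(1000, processors, 10), "ms")
```

With one processor, the burst of 1,000 requests takes 10,000 ms; with 8 processors it takes 1,250 ms; with 1,000 processors each request gets its own processor and the burst finishes in 10 ms. Real workloads are rarely this uniform, so actual speedups are usually smaller.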

✔ QUICK-CHECK 4.16: TRUE or FALSE? Moore's Law predicts that the number of transistors that can be manufactured on a computer chip doubles every 1 to 2 years.

Wireless and Mobile Computing

Computer networks changed in the late 1990s due to the rise of wireless computing. Instead of physical wires or cables, Wi-Fi (Wireless Fidelity) networks utilize radio waves over short distances to connect computers with each other. This allows users to move freely with a laptop or tablet device and easily transport computers from work to home. (See Chapter C3 for a description of the Internet of Things.)

To connect to a Wi-Fi network, a computer must have a wireless adapter that translates data into a radio signal and broadcasts that signal using an antenna. A wireless access point, or Wi-Fi router, receives that signal, decodes it, and passes it on to the Internet over a wired Ethernet connection. In the other direction, the Wi-Fi router receives data from the Internet, translates it into a radio signal, and broadcasts the signal back to the computer. The convenience of wireless connectivity comes at a cost, however. Wi-Fi networks can typically transmit and receive data at a maximum of about 1 Gbit (billion bits) per second, significantly less than the 10 Gbits per second achievable with wired Ethernet. If the network is congested due to multiple computers broadcasting simultaneously, actual transmission rates may degrade considerably. In addition, users need to be concerned about privacy when using a public Wi-Fi network. Since data is broadcast as a radio signal, it can be easily intercepted by anyone within range. Fortunately, Wi-Fi networks can be configured to encrypt the data sent between the computer and the router, making the data transmissions far more secure (see Chapter C7).

The range of Wi-Fi networks can vary, depending on the power of the radio transmitter in the router. Typically, a consumer-quality Wi-Fi router will broadcast 150-300 feet, enough to cover a house or building floor. A longer-range alternative to Wi-Fi is cellular networking. Smartphones and other devices access the Internet/Web by sending and receiving radio signals from cellular towers, which bounce the signals to adjacent towers until they reach a routing station. The typical broadcast distance for a cell tower is 1-2 miles in urban areas and 15-25 miles in rural areas. Transmission speeds using the current 5G standard are typically 100-200 Mbits (million bits) per second, considerably slower than Wi-Fi.
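The practical difference between these transmission speeds is easiest to see by computing how long a transfer would take. The Python sketch below uses the peak rates quoted in this section and a hypothetical 1-gigabyte file. It ignores protocol overhead, congestion, and signal quality, all of which make real transfers slower. Under those idealized assumptions, the file would take about 0.8 seconds over 10-Gbit Ethernet, 8 seconds over 1-Gbit Wi-Fi, and 80 seconds over a 100-Mbit cellular connection.

```python
def transfer_seconds(file_bytes: float, bits_per_second: float) -> float:
    """Ideal time to move file_bytes over a link (8 bits per byte),
    ignoring protocol overhead and congestion."""
    return file_bytes * 8 / bits_per_second


ONE_GB = 1_000_000_000  # hypothetical file size: one decimal gigabyte, in bytes

links = {
    "Wired Ethernet (10 Gbits/s)": 10e9,
    "Wi-Fi (1 Gbit/s)": 1e9,
    "5G cellular (100 Mbits/s)": 100e6,
}
for name, rate in links.items():
    print(f"{name}: {transfer_seconds(ONE_GB, rate):.1f} s")
```

Note that network speeds are quoted in bits per second while file sizes are usually given in bytes, which is why the function multiplies by 8.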

Throughout the 1990s, the term "microprocessor" gradually was replaced with the more informal term "processor." Since virtually every processor developed over the previous decade had been a single-chip microprocessor containing thousands or millions of transistors, the "micro" prefix became redundant and so was dropped. We will use the term "processor" in the rest of this chapter (and subsequent chapters) to refer to microprocessors in the 1990s and beyond.

✔ QUICK-CHECK 4.17: TRUE or FALSE: Accessing the Internet/Web via a cellular network is convenient but slower than a Wi-Fi connection.

Generation 6: ULSI+ & Artificial Intelligence (2006-Present)

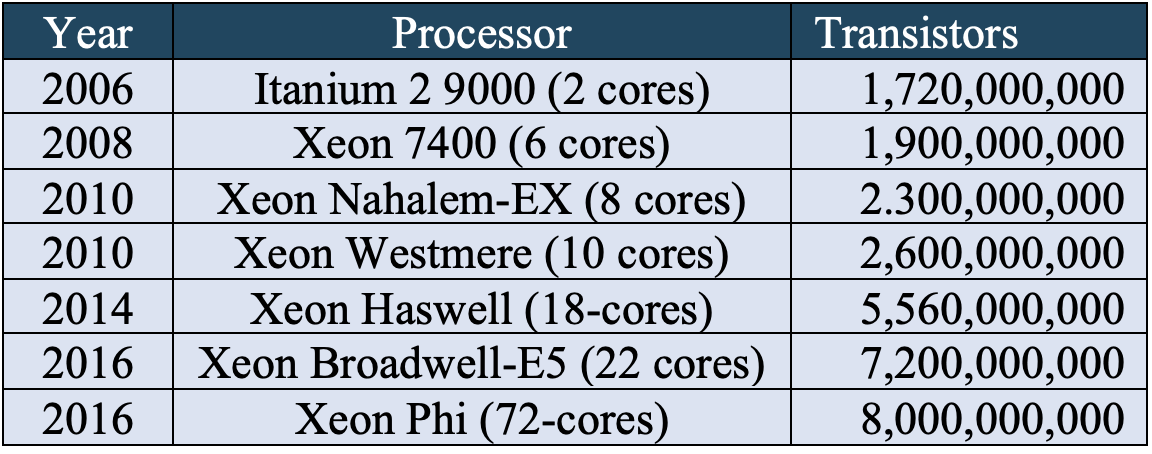

There is no clear consensus among historians as to when Generation 5 ended (or even if it has ended). Following the pattern defined by Generations 4 and 5, we will define the start of Generation 6 to be the point at which manufacturing technology surpassed the billion-transistor threshold. The first billion-transistor processor, the Intel Itanium 2 9000, was released in 2006. FIGURE 17 shows how the number of transistors grew in the first decade of this new generation.

FIGURE 17. Numbers of transistors in Intel processors (2006-2016).

The processors listed in FIGURE 17 are mostly intended for supercomputers or other specialized computers. Typical processors for personal computers today contain 5-10 billion transistors, although you can purchase a high-end Intel i9 processor with up to 26 billion transistors. The largest versions of the M1 and M2 processor families, which Apple introduced in 2020 and 2022, respectively, contain roughly 110-130 billion transistors.

If the idea that a single chip could hold billions of transistors is mind-boggling, it might be helpful to look at it from the other direction. Modern manufacturing technology can produce transistors that are 3 nanometers wide (a nanometer is one billionth of a meter). Since a human hair is approximately 80 micrometers wide (a micrometer is one millionth of a meter), you could fit 26,666 transistors across the width of a human hair. In contrast, the manufacturing technology used to create the first microprocessor, the Intel 4004, could only produce 10-micrometer transistors, more than 3,000 times wider!
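The arithmetic in this paragraph can be checked by converting every measurement to nanometers. The short Python sketch below takes the paragraph's figures at face value (3-nanometer transistors, an 80-micrometer hair, and 10-micrometer transistors for the 4004); the variable names are illustrative.

```python
NM_PER_MICROMETER = 1_000  # 1 micrometer = 1,000 nanometers

hair_width_nm = 80 * NM_PER_MICROMETER          # about 80 micrometers
modern_transistor_nm = 3                        # 3-nanometer transistors
intel_4004_transistor_nm = 10 * NM_PER_MICROMETER  # 10 micrometers

# Whole transistors that fit side by side across one hair
across_hair = hair_width_nm // modern_transistor_nm
# How many times wider a 4004-era transistor was
size_ratio = intel_4004_transistor_nm / modern_transistor_nm

print(f"Transistors across a hair: {across_hair:,}")
print(f"4004 transistor vs. modern: about {size_ratio:,.0f} times wider")
```

Integer division (`//`) counts only whole transistors, which is why the result is 26,666 rather than 26,666.67.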

The transistor counts in FIGURE 17 clearly do not exhibit the doubling every 1-2 years predicted by Moore's Law. However, if we reinterpret Moore's prediction to refer to computing power as opposed to number of transistors, Moore's Law is alive and well. Note that the number of cores continues to increase. Doubling the number of cores can roughly double the amount of work the processor accomplishes, at least for tasks that can be divided among the cores, even when transistors are no longer shrinking as quickly. Of course, there is a cost: chips grow larger as more cores are added.

Artificial Intelligence

One of the most exciting consequences of Generation 6 computers is the rise of Artificial Intelligence (AI) applications. The ability to fit billions of transistors on a single IC chip has led to computers that are faster and more powerful, which in turn has led to software that is able to learn and solve complex tasks that previously could only be done by humans. In fact, the processor with the highest transistor count (as of March 2024) is the Cerebras Wafer Scale Engine 3 (WSE-3), with 4 trillion transistors. The WSE-3 is an AI chip that powers the Cerebras CS-3 AI supercomputer.

AI applications driven by powerful processors are becoming integrated into our daily lives. Common uses of AI include the following:

- Programs such as Apple's Siri and Amazon's Alexa can receive and respond to complex spoken commands, and through other smart technologies can control the temperature of your house or order groceries for delivery.

- Generative AI applications such as OpenAI's ChatGPT and Google's Gemini can write essays or create composite images at the user's request.

- Development tools such as GitHub Copilot and Microsoft IntelliCode can assist a programmer by writing code for specific tasks.

- Facial recognition software is being used by law enforcement agencies to identify suspects in crowds, as well as by businesses to identify returning customers in a store.

- Credit card companies build complex models of customer purchase habits to try to identify fraudulent purchases, and retailers like Amazon use your previous purchases to predict which products might be of interest to you in the future.

- Self-driving cars, also known as autonomous vehicles, combine video processing and advanced AI techniques to control vehicles with little to no driver assistance (FIGURE 18).

FIGURE 18. Uber self-driving car (Elgaland Vargaland/Wikimedia Commons).

The rapid growth of AI tools in the last decade is mainly attributed to advances in computing power, but the rise of cloud computing is another contributing factor. Cloud computing is a generic term for the use of remote computers to store information or provide computational tools. When you create and share a Google Doc, you are utilizing cloud computing, as the document is stored on a Google server, as is the software for editing that document. Likewise, when you back up the photos on your iPhone to iCloud, you are storing those images on an Apple server. All these remote servers that give users access to storage and software tools are known collectively as the cloud. Since AI software tools are complex and require powerful computers to execute, it is simply not feasible for most users to download these tools and run them on their own computers. Instead, the tools are stored in the cloud, where users can access and execute them remotely on powerful computers.

✔ QUICK-CHECK 4.18: TRUE or FALSE: The massive computing power obtained by processors with billions of transistors is fueling AI applications.

Chapter Summary

- The history of computers is commonly divided into generations, each with its own defining technology that prompted drastic improvements in computer design, efficiency, and ease of use.

- Generation 0 (1642-1943) is defined by mechanical computing devices, such as Pascal's calculator (1642), Babbage's Difference Engine (1821), and Aiken's Mark I (1944).

- The first programmable device was Jacquard's loom (1801), which used metal cards with holes punched into them to specify the pattern to be woven into a tapestry. Punch cards were subsequently used in Babbage's Analytical Engine (1833).

- An electromagnetic relay is a mechanical switch that can be used to control the flow of electricity through a wire. Relays were used to construct mechanical computers in the 1930s and 1940s.

- Generation 1 (1943-1954) is defined by vacuum tubes — small glass tubes from which most of the gas has been removed. As vacuum tubes have no moving parts, they enable the switching of electrical signals at speeds far exceeding those of relays.

- The first electronic (vacuum tube) computer was COLOSSUS (1943), built by the British government to decode encrypted Nazi communications. Since COLOSSUS was classified, the first publicly known electronic computer was ENIAC (1946), developed at the University of Pennsylvania.

- In the mid-1940s, John von Neumann introduced the design of a stored-program computer, one in which programs could be stored in memory along with data. The von Neumann architecture continues to form the basis for nearly all modern computers.

- Generation 2 (1954-1963) is defined by transistors — pieces of silicon whose conductivity can be turned on and off using an electric current. Since transistors were smaller, cheaper, more reliable, and more energy-efficient than vacuum tubes, they allowed for the production of more powerful yet inexpensive computers.

- As computers became more affordable, high-level programming languages such as FORTRAN (1957), LISP (1959), and COBOL (1960) made it easier for non-technical users to program and interact with computers.

- Generation 3 (1963-1971) is defined by integrated circuits (ICs) — electronic circuitry that is layered onto silicon chips and packaged in metal or plastic. The ability to package transistors and related circuitry on mass-produced ICs made it possible to build computers that were smaller, faster, and cheaper.

- Moore's Law predicts that the number of transistors that can fit on a chip will double every 1 to 2 years.

- Generation 4 (1971-1989) is defined by the development of IC chips with thousands of transistors (known as very large scale integration or VLSI).

- A microprocessor (or just processor) is a single chip that contains all the circuitry for a computing device, such as a calculator or computer. The first microprocessor was the Intel 4004 (1971).

- The personal computer revolution occurred when technological advances allowed for the production of affordable desktop computers, such as the MITS Altair 8800 (1975), Apple II (1977), and IBM PC (1981). The development of graphical user interfaces (GUIs) and the growth of the software industry, led by Microsoft, also made computers more accessible to non-technical users.

- The availability of personal computers increased demand for networking and led to the growth of the Internet in the 1980s.

- Generation 5 (1989-2006) is defined by the development of IC chips with millions of transistors (known as ultra large scale integration or ULSI).

- The increased speed and power of ULSI processors led to wireless and mobile computing.

- Generation 6 (2006-Present) is defined by the development of IC chips with billions of transistors (ULSI+).

- The incredible speed and processing power of ULSI+ processors has enabled the development of artificial intelligence applications such as digital assistants, generative writing tools, facial recognition, and self-driving cars.

Review Questions

- Mechanical calculators, such as those designed by Pascal and Leibniz, were first developed in the 1600s. However, they were not widely used in businesses and laboratories until the 1800s. Why was this the case?

- Jacquard's loom, although unrelated to computing, influenced the development of modern computing devices. What design features of that machine are relevant to modern computer architectures?

- What advantages did vacuum tubes provide over electromagnetic relays? What were the disadvantages of vacuum tubes?

- As it did with many technologies, World War II greatly influenced the development of computers. In what ways did the war effort contribute to the evolution of computer technology? In what ways did the need for secrecy during the war hinder computer development?

- What features of Babbage's Analytical Engine did von Neumann incorporate into his architecture? Why did it take over a century for Babbage's vision of a general-purpose, programmable computer to be realized?

- It was claimed that the ENIAC was programmable, but programming it to perform a different task required rewiring and reconfiguring the physical components of the machine. Describe how the adoption of the von Neumann architecture allowed subsequent machines to be more easily programmed to perform different tasks.

- What is a transistor, and how did the introduction of transistors lead to faster and cheaper computers? What other effects did transistors have on modern technology and society?

- What is a microprocessor, and how did the introduction of microprocessors lead to faster and cheaper computers?

- What was the first personal computer and when was it first marketed? How was this product different from today's PCs?

- Describe two innovations introduced by Apple Computer in the late 1970s and early 1980s.

- In addition to the examples already given, describe two other examples of artificial intelligence applications, i.e., programs that produce "intelligent" behavior to solve complex tasks.

- Describe the challenge in maintaining the trend in processor manufacturing predicted by Moore's Law.

- Each generation of computers resulted in machines that were cheaper, faster, and thus accessible to more people. How did this trend affect the development of programming languages?

- Two of the technological advances described in this unit were so influential that they earned their inventors a Nobel Prize in Physics. Identify the inventions and inventors.